In this article we will learn how to create a new table in Hive using other query output.

We use create table as select statement to create a new table from select query output data.

Syntax is :

create table [table-name] as [select-query]

Practise below steps to unnderstand this feature better.

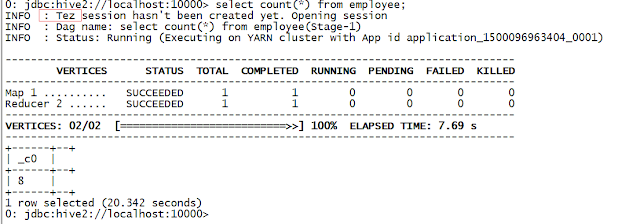

1) Create a hive table called employee using this article.

2) Run a select query to get deptno wise employee count on employee table.

select deptno,count(name) from employee group by deptno

If you want to persist above query output in a hive table , You have to use create table as select (CTAS) query.

3) Create a new table called deptnowiseempcount using above select query output.

create table deptnowiseempcount as

select deptno,count(name) from employee group by deptno;

Table deptnowiseempcount is a managed table.

CTAS has some restrictions like below.

4) The query below fails as the query is trying to create external table using CTAS statement.

create external table deptnowiseempcount as select deptno,count(name) from employee group by deptno;

Query fails throwing an error CREATE-TABLE-AS-SELECT cannot create external table.

We use create table as select statement to create a new table from select query output data.

Syntax is :

create table [table-name] as [select-query]

Practise below steps to unnderstand this feature better.

1) Create a hive table called employee using this article.

2) Run a select query to get deptno wise employee count on employee table.

select deptno,count(name) from employee group by deptno

If you want to persist above query output in a hive table , You have to use create table as select (CTAS) query.

3) Create a new table called deptnowiseempcount using above select query output.

create table deptnowiseempcount as

select deptno,count(name) from employee group by deptno;

Table deptnowiseempcount is a managed table.

CTAS has some restrictions like below.

- New table can not be a external table.

- New table can not be partitioned table.

- New table table can not be a bucketed table.

4) The query below fails as the query is trying to create external table using CTAS statement.

create external table deptnowiseempcount as select deptno,count(name) from employee group by deptno;

Query fails throwing an error CREATE-TABLE-AS-SELECT cannot create external table.