The ACL commands setfacl and getfacl provide advanced permission management in HDFS. This post shows how to enable and disable ACLs in HDFS using Apache Ambari.

ACLs are disabled by default. To enable them, we need to add or modify the dfs.namenode.acls.enabled property in the HDFS configuration.
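For context, here is a minimal sketch of what these commands look like once ACLs are enabled. The helper name `grant_read_access`, the user `alice` and the path `/data/project` are hypothetical placeholders, not part of the original setup.

```shell
# Grant a named user read/execute access on an HDFS path, then print the
# resulting ACL. Both arguments are placeholders supplied by the caller.
grant_read_access() {
  # -m modifies the ACL by adding (or updating) the given entry
  hdfs dfs -setfacl -m "user:$1:r-x" "$2" &&
    hdfs dfs -getfacl "$2"
}

# Example call (hypothetical user and path):
#   grant_read_access alice /data/project
```

While ACLs are disabled, the NameNode rejects the setfacl call, which is why the property has to be enabled first.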

1)

Search the HDFS configuration for the dfs.namenode.acls.enabled property in Ambari. You get no results if the property is not defined yet.

Go to HDFS -> Configs -> enter dfs.namenode.acls.enabled in the filter box
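The same check can be sketched from a shell on a cluster node by looking for the property name in the deployed hdfs-site.xml. The helper name `property_defined` is hypothetical, and the path `/etc/hadoop/conf/hdfs-site.xml` is an assumption about where the client configuration lives on your hosts.

```shell
# Succeeds (exit status 0) if the given property name appears in the given
# Hadoop XML configuration file, and fails otherwise.
property_defined() {
  # -F treats the pattern as a fixed string, so dots are matched literally
  grep -qF "<name>$1</name>" "$2"
}

# Example call; the file path is an assumption, adjust it for your cluster:
#   property_defined dfs.namenode.acls.enabled /etc/hadoop/conf/hdfs-site.xml \
#     && echo "defined" || echo "not defined"
```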

2)

Add the dfs.namenode.acls.enabled property if it is not already present.

Go to HDFS -> Configs -> Advanced -> Custom hdfs-site -> Add Property -> enter dfs.namenode.acls.enabled=true -> click Add

3)

Click the Save button and give the new configuration version a descriptive note, for example "acl property added".

4)

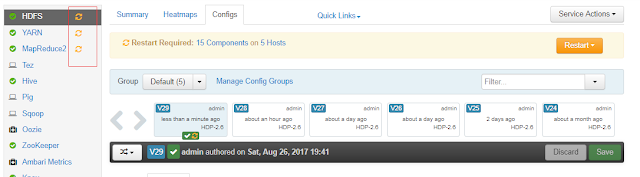

Restart services those show restart symbol.

The following picture shows restart symbol for HDFS, YARN and Mapreduce2 services.

5)

Search for the property as in step 1 and confirm that it has been added.
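As a complementary check from the command line, the effective value can be read with `hdfs getconf`. This is a sketch under the assumption that it runs on a host where Ambari has already deployed the updated client configuration, since `getconf` reads the local configuration files. The helper name `acls_enabled` is hypothetical.

```shell
# Succeeds if the effective value of dfs.namenode.acls.enabled, as seen by
# the local Hadoop client configuration, is "true".
acls_enabled() {
  [ "$(hdfs getconf -confKey dfs.namenode.acls.enabled 2>/dev/null)" = "true" ]
}

# Example call:
#   acls_enabled && echo "ACLs enabled" || echo "ACLs disabled"
```

Unlike the search in Ambari, this also reports the built-in default when the property is not explicitly defined.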

6)

If the property is already present, just change its value from false to true to enable ACLs in HDFS.

7)

If ACLs are already enabled, you can disable them by setting dfs.namenode.acls.enabled to false, following the same steps as above.